PuppetShow is an interface plug-in for Epic’s Unreal Tournament 2004. It uses an external Java program to map visual input from a webcam onto the character animation of customized Unreal game avatars, adding computer vision as another input device for physically puppeteering virtual game characters. Computer vision allows for an intuitive mapping of body movements onto virtual characters and gives human actors better access to an expressive virtual performance. We argue that this exemplifies an interface trend towards more expressive input options that support a higher level of expression in video games.

PuppetShow was built with Processing and runs within the Unreal Runtime using a custom gametype. PuppetShow can track up to three unique colors and relay their transformations onto a 3D character. The PuppetShow interface is intuitive and lets users customize how the system modifies the 3D character, be it the affected bone, the range in which it moves, or how the puppet is tracked.

To download the different alpha packages of PuppetShow, visit the downloads page. To see PuppetShow in action, check out the pictures and video below.

OK, manifesto might be too strong a term.

PuppetShow was created to liberate Machinima actors and expressive gamers from the shackles of the mouse, keyboard, and other abstract middleware. The mouse and keyboard cannot project the human figure’s true expressive range into a virtual environment intuitively. To do so, an interface must be able to understand motions from the whole human body, not just complex motions on a plane (mouse) or keystrokes.

PuppetShow’s goal is to provide a simple, open-source, expressive interface for the Machinima and expressive games community. Its creators hope that it will allow more expressive interactive experiences to be forged. Intuitive and expressive interfaces are the future of human-scale computing. PuppetShow is a glimpse of that future.

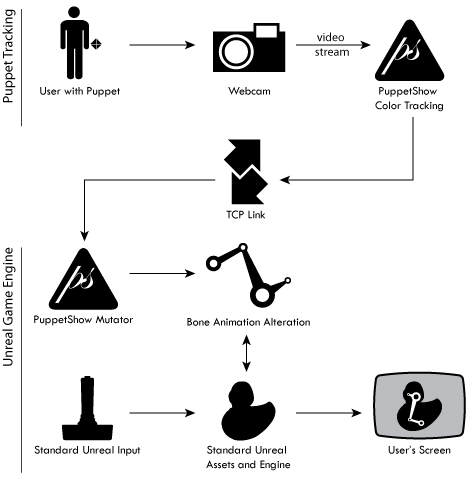

PuppetShow is actually two separate systems that communicate over a TCP link: the puppet tracker and the Unreal puppet animation system. The overall flow diagram shows how PuppetShow works.
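To make the TCP link between the two systems more concrete, here is a minimal Java sketch of how a tracker might serialize one update and send it over a socket. The wire format (one text line holding a color channel index and a normalized x/y position) and the names `PuppetLink`, `Update`, `encode`, `decode`, and `send` are all assumptions made for illustration; the actual PuppetShow protocol is not described here.

```java
import java.io.IOException;
import java.io.OutputStreamWriter;
import java.io.PrintWriter;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.Locale;

// Hypothetical helper: not part of PuppetShow, only a sketch of the idea.
public class PuppetLink {

    /** One tracked-color update: which color channel moved, and where it is (0..1). */
    public static final class Update {
        public final int channel;
        public final float x;
        public final float y;

        public Update(int channel, float x, float y) {
            this.channel = channel;
            this.x = x;
            this.y = y;
        }
    }

    /** Encodes an update as "channel x y" (assumed format; Locale.ROOT keeps '.' as the decimal mark). */
    public static String encode(Update u) {
        return String.format(Locale.ROOT, "%d %.4f %.4f", u.channel, u.x, u.y);
    }

    /** Parses a line produced by encode(). */
    public static Update decode(String line) {
        String[] parts = line.trim().split("\\s+");
        if (parts.length != 3) {
            throw new IllegalArgumentException("Expected 3 fields, got " + parts.length);
        }
        return new Update(Integer.parseInt(parts[0]),
                          Float.parseFloat(parts[1]),
                          Float.parseFloat(parts[2]));
    }

    /** Opens a TCP connection, writes one newline-terminated update, and closes the connection. */
    public static void send(String host, int port, Update u) throws IOException {
        try (Socket socket = new Socket(host, port);
             PrintWriter out = new PrintWriter(
                     new OutputStreamWriter(socket.getOutputStream(), StandardCharsets.UTF_8))) {
            out.print(encode(u) + "\n");
            out.flush();
        }
    }
}
```

A real tracker would more likely keep one connection open and stream updates every frame; the one-shot `send` above only keeps the sketch short.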

Puppet tracking is accomplished by using a puppet, a webcam, and the PuppetShow color tracking applet. The color tracking software uses a refined blob tracking algorithm to track solid colors on a puppet. Since the tracking is based solely on color, almost anything can act as a puppet: origami, gloves, socks, shirts, pineapples and so forth! The tracking color can be set easily with the dynamic color calibration system. Based on a user-defined scheme, the tracking software then takes the blobs and translates them into usable information, which is subsequently sent to the Unreal Runtime running the PuppetShow animation system.
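The idea of tracking a solid color can be sketched in a few lines of Java. This is a deliberately simplified stand-in, not PuppetShow's refined blob tracking algorithm: it finds every pixel within a tolerance of a target color and returns the centroid of those pixels. The class name `ColorCentroid`, the method `track`, and the tolerance parameter are hypothetical; the pixel layout (a row-major `int[]` of packed RGB values) mirrors what Processing exposes as `pixels[]`.

```java
// Hypothetical, simplified color tracker: centroid of all pixels close to a target color.
public class ColorCentroid {

    /**
     * Scans a row-major array of packed 0xRRGGBB (or 0xAARRGGBB) pixels.
     * Returns {centerX, centerY, matchingPixelCount}, or null if nothing matches.
     */
    public static float[] track(int[] pixels, int width, int height, int target, int tolerance) {
        int tr = (target >> 16) & 0xFF;
        int tg = (target >> 8) & 0xFF;
        int tb = target & 0xFF;
        long maxDistSq = (long) tolerance * tolerance;

        long sumX = 0;
        long sumY = 0;
        int count = 0;

        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                int p = pixels[y * width + x];
                int dr = ((p >> 16) & 0xFF) - tr;
                int dg = ((p >> 8) & 0xFF) - tg;
                int db = (p & 0xFF) - tb;
                // Squared Euclidean distance in RGB space; the alpha byte is ignored.
                long distSq = (long) dr * dr + (long) dg * dg + (long) db * db;
                if (distSq <= maxDistSq) {
                    sumX += x;
                    sumY += y;
                    count++;
                }
            }
        }

        if (count == 0) {
            return null; // the target color is not visible in this frame
        }
        return new float[] { (float) sumX / count, (float) sumY / count, count };
    }
}
```

Running `track` once per calibrated color would yield up to three positions per frame. A real tracker would also need to separate disconnected regions and reject noise, which this sketch does not attempt.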

The PuppetShow gametype takes in values from the color tracker and translates them into bone translations and rotations. These are then applied to the character-controlled 3D mesh (in the diagram: a ducky!), which is subsequently displayed on the screen. An important note: PuppetShow does not interfere with the standard input devices of Unreal. This allows players to move and manipulate their characters as they usually would, with the PuppetShow animations added on top.
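The translation from a tracked value to a bone rotation is essentially a clamped linear mapping into a user-chosen range. The sketch below shows that arithmetic in Java for illustration only; in PuppetShow this step happens inside the Unreal gametype, and the names `BoneMapping`, `mapToDegrees`, and `degreesToUnrealUnits` are hypothetical. It assumes the tracker delivers a normalized value between 0 and 1, and uses the Unreal Engine 2 rotator convention in which 65536 units make a full turn.

```java
// Hypothetical helper illustrating the value-to-rotation arithmetic.
public class BoneMapping {

    /** Unreal Engine 2 rotators express a full 360-degree turn as 65536 units. */
    public static final int UNREAL_UNITS_PER_TURN = 65536;

    /**
     * Maps a normalized tracker value t (clamped to 0..1) linearly
     * onto the configured rotation range [minDeg, maxDeg].
     */
    public static float mapToDegrees(float t, float minDeg, float maxDeg) {
        float clamped = Math.max(0f, Math.min(1f, t));
        return minDeg + clamped * (maxDeg - minDeg);
    }

    /** Converts degrees to integer Unreal rotator units, rounding to the nearest unit. */
    public static int degreesToUnrealUnits(float degrees) {
        return Math.round(degrees * UNREAL_UNITS_PER_TURN / 360f);
    }
}
```

For example, with a range of -45 to 45 degrees, a blob at the middle of the frame (`t = 0.5`) leaves the bone at 0 degrees, while a blob at the far edge rotates it by 45 degrees, or 8192 rotator units. Clamping keeps a noisy or out-of-frame reading from pushing the bone past its configured range.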

That's it in a nutshell! Please see the FAQ for step-by-step usage instructions.

The current system at work:

The original character demo video:

And some images of the system being used!