With the advent of virtual reality (VR) video game hardware platforms such as the Oculus Rift and the Vive, video game design is at a crossroads: it must now find ways to play with this new hardware. This project proposes puppetry control mechanisms to advance game design for VR.

A Bridge Between Bodies is a Master’s Project being carried out at Georgia Tech’s Digital Media program by Pierce McBride, with Michael Nitsche serving as his advisor and committee chair. Jay Bolter and Ian Bogost make up the other two members of his committee.

Although VR as a technology has been experimented with in various academic labs since the early 1980s, the recent resurgence in VR technology has so far been the most public-facing push for the medium. As of the time of writing, two platforms have grown out of early prototypes and achieved what is arguably the widest distribution of VR technology yet seen: the Oculus Rift and the HTC Vive.

Notably, both of these platforms now utilize a similar stack of hardware interfaces, which include two motion-based handheld controllers and a head-mounted display also tracked within physical 3D space. These hardware interfaces lead to what Marco Gillies describes as “Movement Interaction”, or interaction design based upon physical motion of the body. This type of interaction design, coupled with the fact that VR utilizes a head-mounted display, has led the majority of VR video games to rely on the first person perspective. In addition, those video games that do break from the norm and play with a third person perspective don’t use the motion-based controllers. This suggests to us a question, and a problem.

There are very few third person VR video games, none of which use movement interactions as input. What could a third person VR video game play like?

This is where I see puppetry as a solution. Puppetry already contends with the question of how to control other characters using motion. Puppetry has what I would describe as physical interfaces, in the form of handles and rods, that map the movement of the puppeteer to the puppet. I only need to reinterpret existing forms of puppetry and apply that design to VR interfaces.

Furthermore, I previously worked on an NEH-funded project titled Archiving Performative Objects, which tackled a similar problem with puppets in VR. In that case the purpose was archival, but I could share assets and code between the two projects and speed up development.

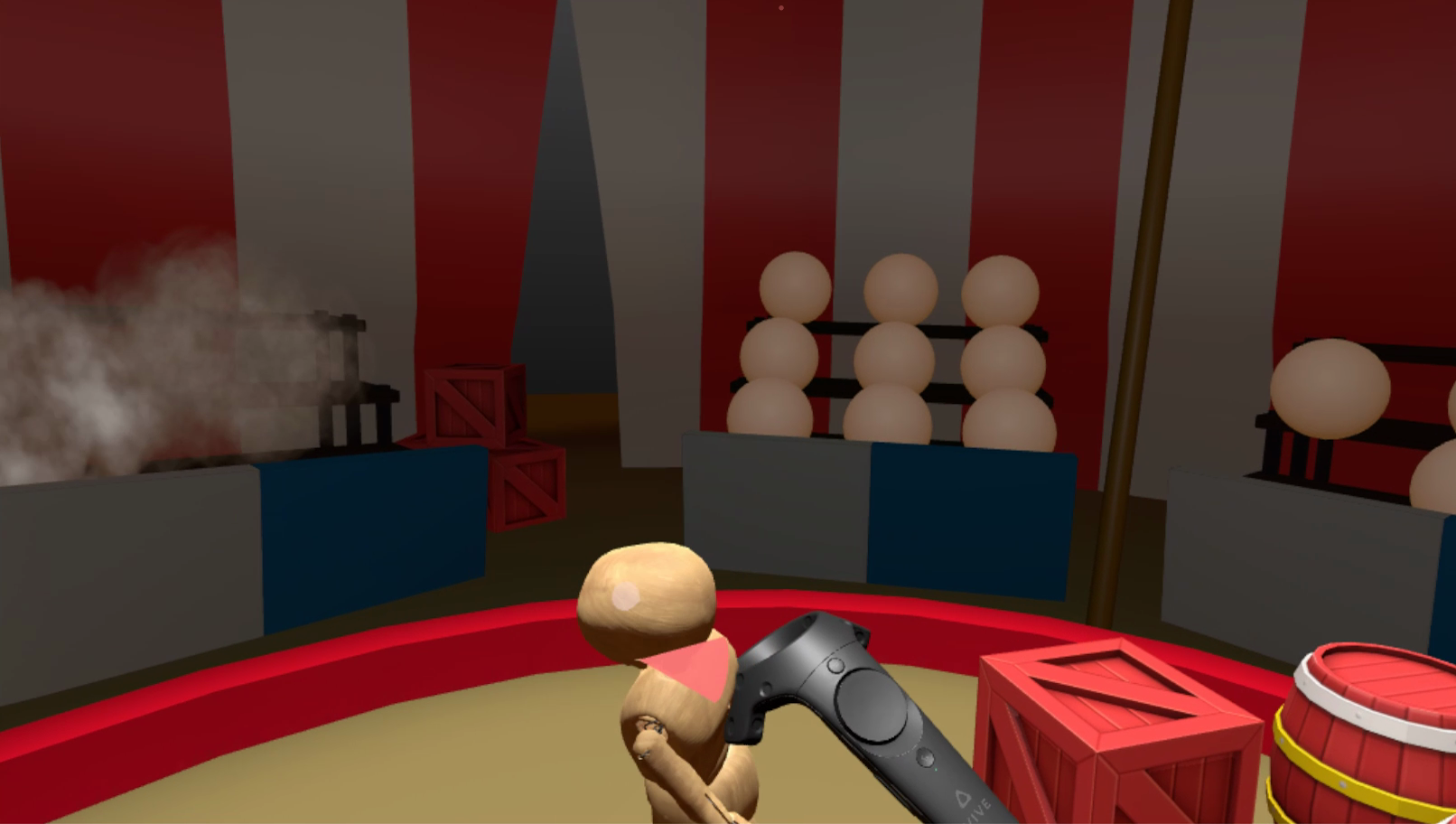

The project is a video game prototype made in Unity for the Oculus and Vive. In the game, the player controls three acts of a circus. Each act features a different puppet, with a different interface based off a form of puppetry and a different circus act. The player’s goal is to keep the audience excited by performing each puppet’s act.

The three puppets/acts are outlined below.

| Puppet | Description |
| --- | --- |
| Strongman | A bunraku-based puppet designed after cartoonish circus weightlifters |
| Balance Beam | A rod-based puppet designed after tightrope and high wire acts |
| Juggler | A marionette-based puppet designed after juggling and balance acts |

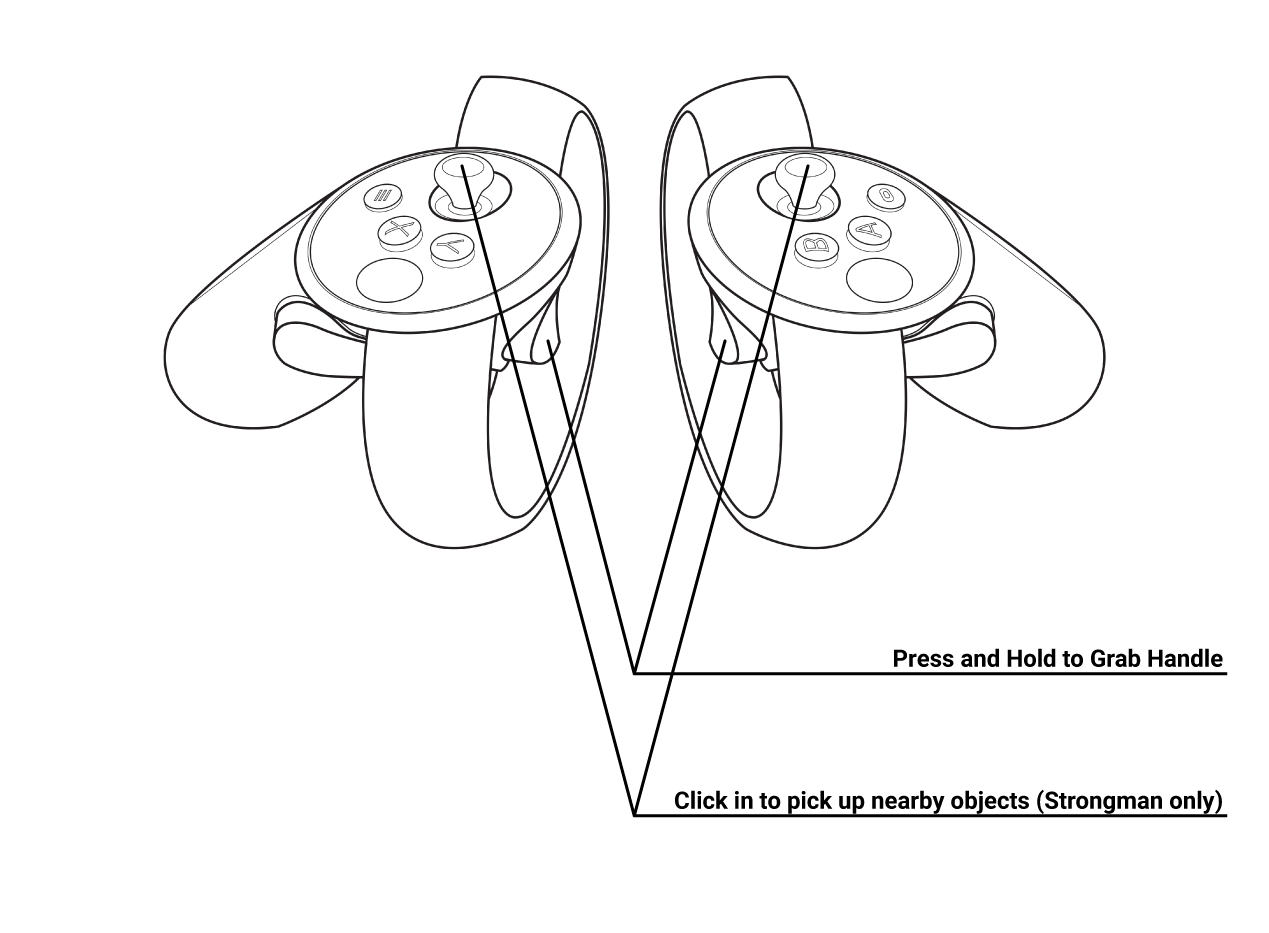

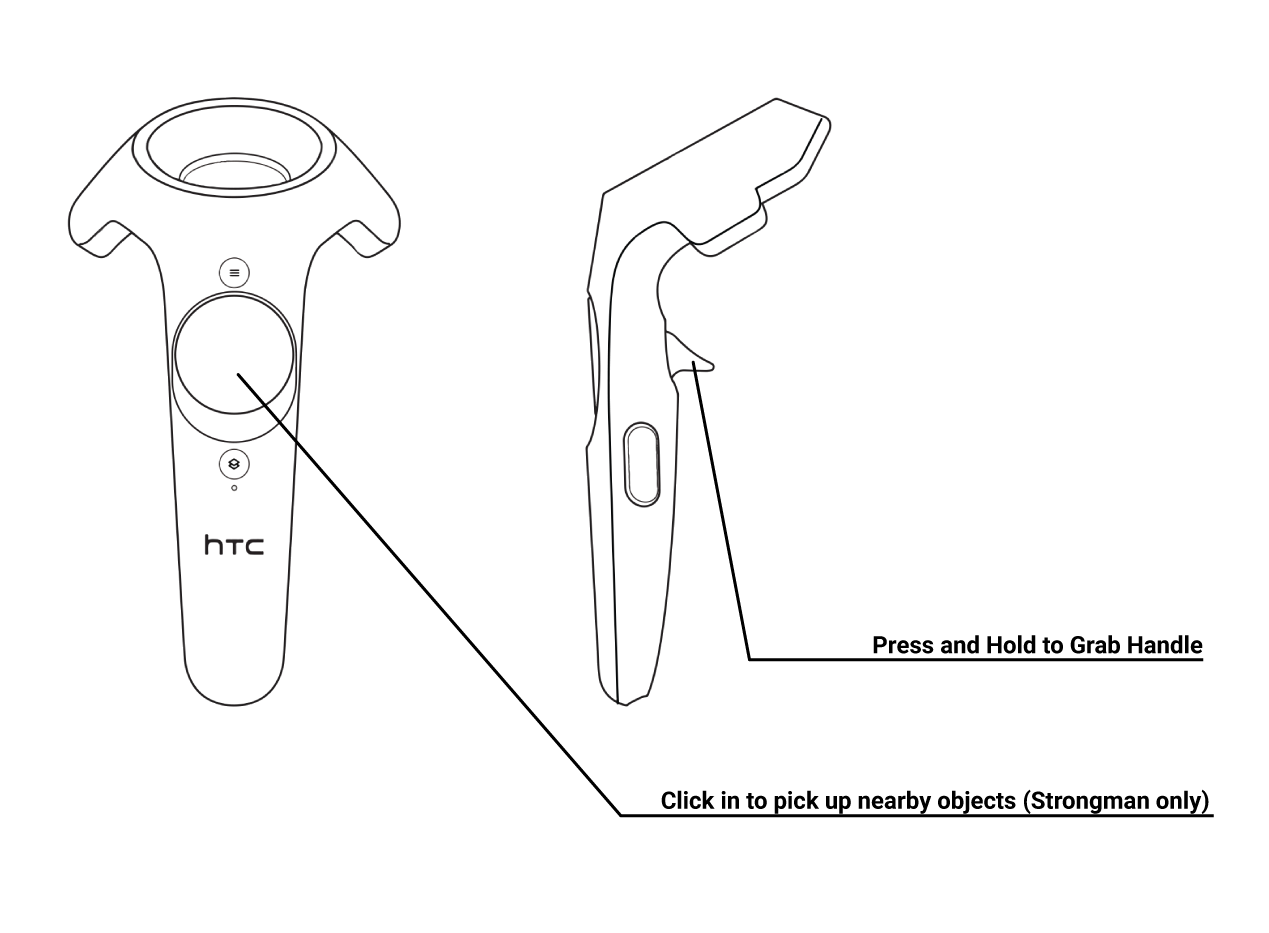

The player controls each of these puppets using the motion controllers, and nearly all the controls are encapsulated in just one button on each controller.

The project’s design process began in January 2018 and started with a sketching and brainstorming phase. During this phase I moved through a few different ideas, like a fake zoo where the player needs to trick people into thinking the animals are real and a yoga class where the player needs to give everyone a workout without straining the less physically fit students. However, I landed on the circus idea because it aligned with our requirement for a game that

This design led into a rapid prototyping phase, where I built out individual features and technical solutions week by week. Our first prototypes focused on the technical and design requirements for the puppets, and were quite simple. The prototype on the right, for example, was an early test of the technical implementation of our physics tracker system and the strongman puppet design.

These early prototypes also solidified the core interaction design, which was the use of the controllers as surrogate hands. Each controller can grab any handle by pressing and holding down on the trigger in the back. I liked this approach because it aligned both with Gillies’s object manipulation type of movement interaction and with the standard input convention of the medium.

Over time, I moved onto interface prototypes. By this point, I had done some informal critiques and realized that a consistent design language for the handles, or the objects players grab to manipulate the puppets, would be necessary. Some of my first versions used simple shapes that change color when touched.

I liked the use of color but I found the floating shapes confusing, as they were not visibly distinct from the rest of the environment. I tried another approach using 2D shapes indicating handles instead.

This approach failed because it didn’t show the orientation of the handle, only the position. Because the handles must influence both to let players directly manipulate the puppets, I couldn’t scrap that requirement, so I threw away this prototype. However, I liked the way the 2D interface was clearly distinct from the 3D environment through rendering, and I wondered if rendering could do something similar to 3D objects.

This thought led to the final design, where the handles are 3D objects, but are rendered one solid color and “on top” of all other objects.

The last portion of the prototype I worked on was the game structure, or the rules of the game and not just how one plays. The game structure unfortunately came late in the process, and went through a few iterations but never came together into a full-fledged video game.

The core idea behind this video game was based around the simple premise of a crowd, but I needed a way to show clear feedback based on the enthusiasm of the crowd. One of our first versions used a meter above each puppet to indicate to the player that they need to play with different puppets to keep the audience interested in each of them.

However, I found that this idea didn’t work in practice. 2D interfaces in VR are already a tricky design choice, but in our case the players I showed this to focused all their attention on the puppet and not at all on the meter, primarily because the two were not visible at once.

I found this to be interesting, but couldn’t stick with this design. Given that the issue was an “out of sight, out of mind” type of problem, I thought about how the same kind of information could be conveyed in a different form. This is where I got the idea to use sound, and audience cheering.

The final prototype uses a single metric, which increases based on the player’s use of any of the puppets. The audience gives feedback by increasing the size of the crowd and the volume of the audience cheering. The crowd size is clearly a visual cue, but crowd sound is something a player could be cognizant of regardless of what they were looking at.
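The feedback loop described above can be sketched in a few lines. This is only an illustration written in Python, not the project's Unity code: the class name `AudienceFeedback`, the gain rate, and the maximum crowd size are all hypothetical values chosen for the example, and the linear mapping from the metric to crowd size and volume is an assumption.

```python
from dataclasses import dataclass


@dataclass
class AudienceFeedback:
    """One shared excitement metric that drives both audience cues."""
    excitement: float = 0.0       # single metric, kept within [0, 1]
    gain_per_second: float = 0.1  # hypothetical rate, not from the project
    max_crowd: int = 50           # hypothetical crowd cap, not from the project

    def update(self, puppet_in_use: bool, dt: float) -> None:
        # The metric rises whenever the player is using any puppet.
        if puppet_in_use:
            self.excitement = min(1.0, self.excitement + self.gain_per_second * dt)

    @property
    def crowd_size(self) -> int:
        # Visual cue: more excitement means more audience members.
        return round(self.excitement * self.max_crowd)

    @property
    def cheer_volume(self) -> float:
        # Audio cue: audible regardless of where the player is looking.
        return self.excitement


if __name__ == "__main__":
    feedback = AudienceFeedback()
    for _ in range(5):
        feedback.update(puppet_in_use=True, dt=1.0)
    print(feedback.crowd_size, feedback.cheer_volume)
```

Deriving both cues from the same value keeps the visual and audio feedback in step, so a player who cannot see the crowd still hears the same state.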

I did a pilot IRB study of our prototype at this point, and here I was hit with feedback about the lack of a goal. Again, I somewhat expected this given that the game structure was the last portion of my design I worked on, but it’s unfortunate nonetheless. However, I did receive positive feedback about the interface itself. Given the length of time I had to work on the project and the kind of technical problems I needed to solve, I still found this feedback quite invigorating.

The physics system I developed for this project existed to solve a particular problem: how to manipulate a single ragdoll with two hands at once.

At face value, this problem doesn’t suggest a significant hurdle. However, do a little development and you’ll find the first/most obvious solution (use regular joint constraints) causes this…

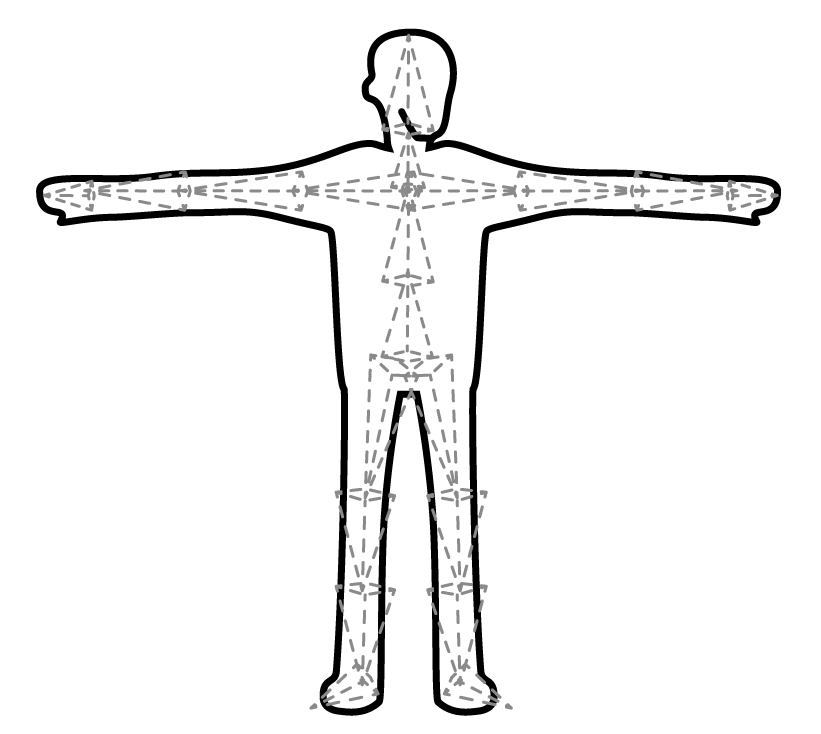

This issue is the result of the way ragdolls work in games, or more specifically the way joint constraints and rigs work. 3D characters are animated using rigs which look like this.

These rigs are made up of bones, which act like our actual bones. They bend and rotate like real bones, but when pulled apart they become unstable, like the GIF above.

To solve this problem we developed a dual-control system where the skeleton position and orientation is based on the location of targets and handles. Targets are invisible objects that the game controls the position and orientation of, while handles are visible objects the player can grab. Both constantly update the position and orientation of a specified part of the puppet’s skeleton, which in turn affects the 3D model.

The diagram on the right, for example, shows the balance beam puppet. The entire upper body stands upright without player input, but the player controls the position of the entire puppet through the manipulation of the feet.

The actual tracking is based on a PID (proportional-integral-derivative) controller, a feedback algorithm widely used in industrial control and robotics. It modulates a variable based on the error between its current value and a target value. Applying it to the x, y, and z values of the puppet’s position and the w, x, y, and z values of its orientation lets the puppet iteratively reach the target using Unity’s physics engine.
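To make the idea concrete, here is a minimal sketch of PID tracking on position only, written in Python rather than as Unity code. It is a textbook controller under stated assumptions, not the project's implementation: the gains, the unit-mass point, the 60 Hz step, and the names `PID` and `track_position` are all illustrative. The orientation components would presumably get the same per-component treatment, which is not shown here.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class PID:
    """Textbook PID controller for a single scalar value."""
    kp: float  # proportional gain (illustrative, not from the project)
    ki: float  # integral gain
    kd: float  # derivative gain
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, current: float, target: float, dt: float) -> float:
        error = target - current
        self.integral += error * dt
        # Skip the derivative on the first step to avoid a startup spike.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def track_position(target: Tuple[float, float, float],
                   steps: int = 600,
                   dt: float = 1.0 / 60.0) -> Tuple[float, ...]:
    """Push a unit-mass point from the origin toward target, one PID per axis."""
    controllers = [PID(kp=40.0, ki=0.5, kd=10.0) for _ in target]
    position = [0.0, 0.0, 0.0]
    velocity = [0.0, 0.0, 0.0]
    for _ in range(steps):
        for axis, pid in enumerate(controllers):
            force = pid.update(position[axis], target[axis], dt)
            velocity[axis] += force * dt           # unit mass: acceleration == force
            position[axis] += velocity[axis] * dt  # semi-implicit Euler step
    return tuple(position)


if __name__ == "__main__":
    print(track_position((1.0, 2.0, -0.5)))
```

Because the controller only ever applies forces, the simulated body approaches the target through the physics step instead of being teleported to it. Raising `kp` makes the response snappier, while `kd` damps overshoot.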

I found this breakthrough to be the most valuable aspect of this project, as it provides a reliable way for me to create puppets, tweak their responsiveness and apply tuning to different puppets quickly.

Given the lack of similar projects in the game research and design research fields, I see ample opportunity to expand upon this work. The technical solution I devised could potentially be a useful way to approach mapping movement interactions to physically simulated objects in VR. Because the solution does not rely on constraints, it’s far less prone to instability and might offer technical methods to designers seeking to create more nuanced touch interactions in VR.

I also see this as a first step in the development of a viable approach to new interaction design methods in VR. Given the current landscape, experimentation with the kinds of games and mechanics should be welcome and could potentially attract new audiences.

Speaking for myself, this project was more than enough work for a Master’s degree from Georgia Institute of Technology. However, I intend to continue this work on my own time, with the hope of eventually releasing either the technical solutions as independent packages for other developers or a full-fledged VR game. Updates on my current work will be posted on my Twitter, and if this description interests you please feel free to reach out or download an executable from GitHub.