Full Stage Multiplayer Theremin

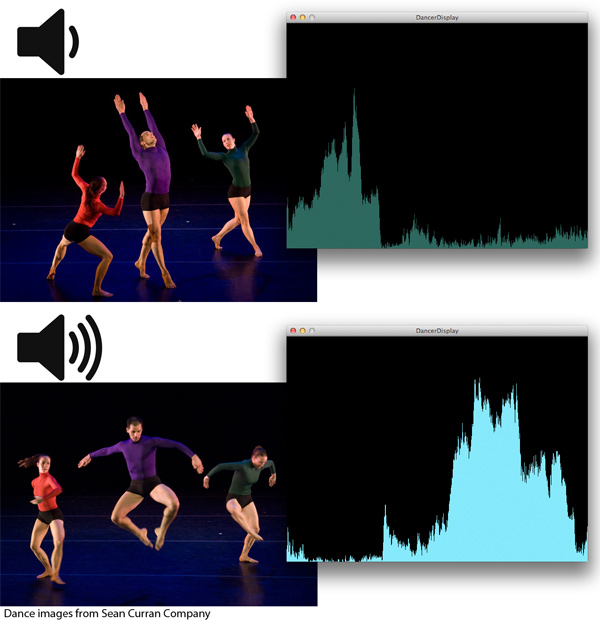

October 10th, 2012 By FL-11630

1. Set up a Processing application that maps sound pitch, volume, pan, and timing to motion detection (the frame-to-frame delta from a video camera will work for this).

2. Point the camera at the performance.

3. Start the Processing application.

4. Offer the resulting real-time audio as a new way to experience the show’s fast and slow bursts, follow shifts in where the energy is on stage, and hear the types of movements the dancers make.
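The motion detection behind step 1 can be sketched as plain frame differencing. The Java below (the language Processing is built on) is only an illustration under assumptions, not the demo's actual code: the class `FrameDelta`, the method `regionMotion`, the choice of vertical strips as the "areas", and the use of one brightness value (0 to 255) per pixel are all hypothetical.

```java
// Hypothetical sketch: per-area motion from the difference of two video frames.
final class FrameDelta {
    /**
     * Returns the mean absolute brightness change in each vertical strip,
     * scaled to the range 0..1 (0 = no change, 1 = every pixel flipped fully).
     * prev and curr hold one brightness value (0..255) per pixel, row by row.
     */
    static double[] regionMotion(int[] prev, int[] curr,
                                 int width, int height, int strips) {
        if (prev.length != width * height || curr.length != width * height) {
            throw new IllegalArgumentException("frame size mismatch");
        }
        double[] sum = new double[strips];
        int[] count = new int[strips];
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                // Assign each pixel column to a strip, left to right.
                int strip = Math.min(strips - 1, x * strips / width);
                int i = y * width + x;
                sum[strip] += Math.abs(curr[i] - prev[i]);
                count[strip]++;
            }
        }
        double[] motion = new double[strips];
        for (int s = 0; s < strips; s++) {
            motion[s] = count[s] == 0 ? 0.0 : sum[s] / (255.0 * count[s]);
        }
        return motion;
    }
}
```

In a real sketch the two arrays would come from consecutive camera frames converted to brightness; more strips give finer left-to-right resolution at the cost of noisier values per strip.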

Iteration would be required to achieve the types of tones and timings desired by the team. The present pre-alpha version of the software is for demonstration purposes only, and at this time mostly demonstrates that tone, pitch, and amplitude can be made a function of the total motion detected (frame differences) within different areas of the camera's view.
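One possible shape for such a motion-to-audio mapping is sketched below in Java. It assumes each area of the camera view already has a motion value between 0 and 1; the class `MotionMapping`, its method names, and the 110 to 880 Hz pitch range are hypothetical choices for illustration, not what the pre-alpha software does.

```java
// Hypothetical sketch: turning motion values (0..1) into pitch, volume, and pan.
final class MotionMapping {
    static final double MIN_HZ = 110.0; // assumed lowest pitch
    static final double MAX_HZ = 880.0; // assumed highest pitch, three octaves up

    static double clamp01(double v) {
        return Math.max(0.0, Math.min(1.0, v));
    }

    /** Exponential map, so equal steps in motion sound like equal musical intervals. */
    static double frequencyHz(double motion) {
        return MIN_HZ * Math.pow(MAX_HZ / MIN_HZ, clamp01(motion));
    }

    /** More motion is louder; values outside 0..1 are clipped. */
    static double amplitude(double motion) {
        return clamp01(motion);
    }

    /**
     * Pan from -1 (left) to +1 (right): the motion-weighted centre of the
     * areas, ordered left to right. Returns 0 (centre) when nothing moves.
     */
    static double pan(double[] areaMotion) {
        double total = 0.0;
        double weighted = 0.0;
        int n = areaMotion.length;
        for (int i = 0; i < n; i++) {
            double position = n == 1 ? 0.0 : -1.0 + 2.0 * i / (n - 1);
            total += areaMotion[i];
            weighted += areaMotion[i] * position;
        }
        return total == 0.0 ? 0.0 : weighted / total;
    }
}
```

The three outputs would then drive whatever oscillator the sketch uses. Swapping the exponential pitch curve for a linear one, or quantizing to a scale, is the kind of iteration the team would need to try by ear.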

Experimentation with how to “play” any given motion-to-audio mapping could promote different types of exploratory movements. In addition to providing an optional audio dimension to the movements, this design could conceivably, with enough improvement, provide a way for visitors with severe vision disabilities to enjoy the pacing and stage action of the performance, roughly similar in principle to the aquarium research across the hall from DWIG.