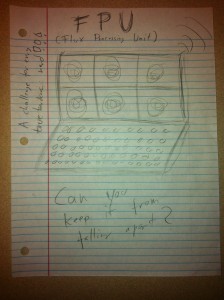

Anti-Deskilling – Improved Electronic kit

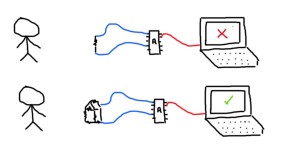

January 29th, 2013 by Phillipe

Google, Wikipedia, Instructables… We tend to use our computer as a magic oracle that knows everything. By doing so, we may give it too much trust, losing part of our critical thinking and passively accepting its almighty knowledge.

I propose to redesign a random electronic kit, but to prepare it badly on purpose: no instructions, and missing resistors or too many of them. To counterbalance these complications, I suggest a radical approach: empowering the computer even more. It knows the instructions to build the kit, but you need to convince it that you're worthy of the next instruction by showing your technical skills and their improvement, and by building an intuitive understanding of the materials you are using.
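The gating idea can be pictured as a small state machine: instructions are handed out one at a time, and some are only released once the user has measured the right value. This is a minimal sketch, not the kit's actual software; the `Step` and `Tutor` names and the 5 % tolerance are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Step:
    text: str                       # instruction shown once the step is unlocked
    target: Optional[float] = None  # value the user must measure first (None = free step)
    tolerance: float = 0.05         # accepted relative error (hypothetical default)


class Tutor:
    """Hands out build instructions one at a time; some are locked behind a measurement."""

    def __init__(self, steps: List[Step]):
        self.steps = steps
        self.index = 0  # position of the next instruction to reveal

    @property
    def done(self) -> bool:
        return self.index >= len(self.steps)

    def request_next(self, measurement: Optional[float] = None) -> Optional[str]:
        """Return the next instruction, or None while it is locked or the kit is finished."""
        if self.done:
            return None
        step = self.steps[self.index]
        if step.target is not None:
            if measurement is None:
                return None  # a measurement is required but none was supplied
            if abs(measurement - step.target) > step.tolerance * step.target:
                return None  # the user has not yet shown the required value
        self.index += 1
        return step.text


tutor = Tutor([
    Step("Solder the IC socket."),
    Step("Place the part you just built as R1.", target=4700.0),
])
print(tutor.request_next())        # Solder the IC socket.
print(tutor.request_next(3300.0))  # None: still locked
print(tutor.request_next(4750.0))  # Place the part you just built as R1.
```

Harder difficulty levels could simply tighten `tolerance` or hide more of the steps behind targets.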

Various levels of complexity, difficulty and interaction can be used depending on the user, their skill level, etc.

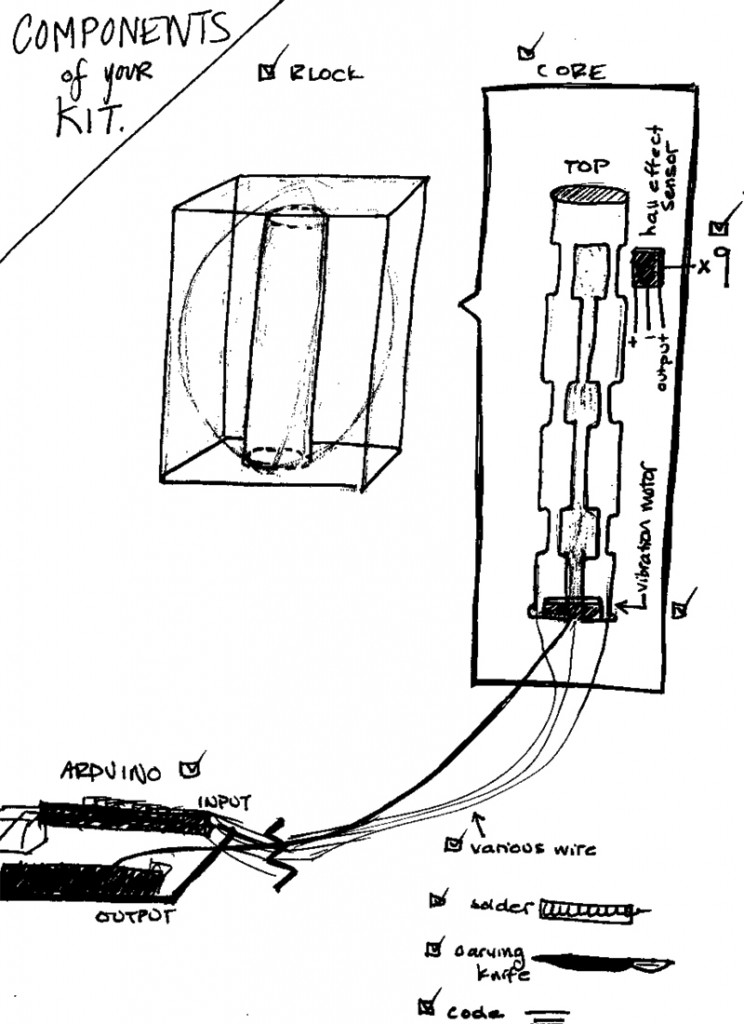

Kit contents

– A large share of the components required for the kit

– “Useless” extra components

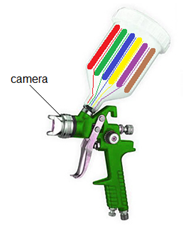

– Prebuilt Arduino board for measuring resistance/capacitance/inductance

– Software

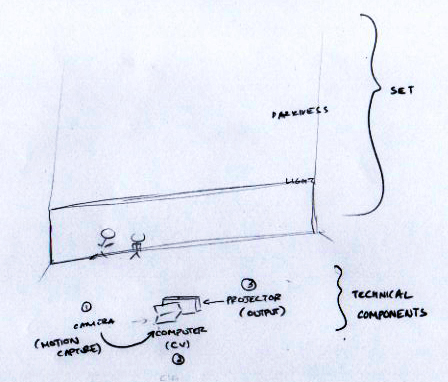

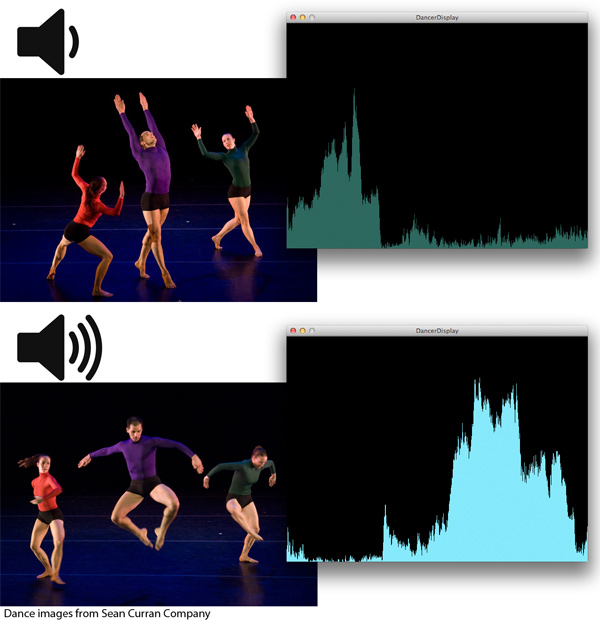

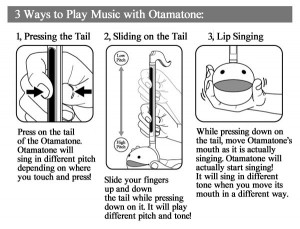

Technical implementation / Interaction description

The prebuilt Arduino board serves as a cheap multimeter that can be interfaced with the custom software.
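As a rough idea of the arithmetic such a "cheap multimeter" would do on the computer side, here is a sketch assuming a 10-bit ADC reading (0..1023, as `analogRead()` returns on an Arduino Uno), a voltage divider for resistance, an RC charge time for capacitance, and an LC resonance for inductance. The wiring and the function names are assumptions, not the kit's actual design.

```python
import math

ADC_MAX = 1023  # 10-bit ADC, as on the Arduino Uno: analogRead() returns 0..1023


def resistance_from_adc(adc: int, r_ref: float) -> float:
    """Unknown resistance R_x from a voltage-divider reading.

    Assumed wiring: 5V -- r_ref -- A0 -- R_x -- GND, so
    adc / ADC_MAX = R_x / (r_ref + R_x), i.e. R_x = r_ref * adc / (ADC_MAX - adc).
    """
    if not 0 <= adc <= ADC_MAX:
        raise ValueError("ADC reading out of range")
    if adc == ADC_MAX:
        return math.inf  # open circuit: nothing between the probes
    return r_ref * adc / (ADC_MAX - adc)


def capacitance_from_rc(t_63: float, r_charge: float) -> float:
    """C from the time (s) to charge from 0 V to ~63.2 % of the supply through r_charge.

    That time is one RC time constant, so C = t / R.
    """
    return t_63 / r_charge


def inductance_from_resonance(freq_hz: float, c_known: float) -> float:
    """L from the resonant frequency of an LC tank with a known capacitor.

    f = 1 / (2 * pi * sqrt(L * C))  =>  L = 1 / ((2 * pi * f)^2 * C).
    """
    return 1.0 / ((2 * math.pi * freq_hz) ** 2 * c_known)
```

With a 10 kΩ reference resistor, a reading of 341 gives `resistance_from_adc(341, 10_000) == 5000.0`.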

The custom software will first prompt the user with some clear instructions on how to start soldering the kit.

Soon, the user will reach a point where a needed component is not present as is or, even worse, where the computer won't ask for a precise component but will only give hints of what is needed: a bigger resistance, a smaller inductance… The user will then have to “build” the component himself by assembling parts from the “useless” extra components, and use the Arduino board to ask the computer whether he's getting closer to what's needed.
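The hints described above never reveal the target itself, only a direction. A minimal sketch of that logic, assuming a relative tolerance of 5 % (a hypothetical value) and positive target values:

```python
def hint(measured: float, target: float, tolerance: float = 0.05) -> str:
    """Coarse hint comparing a measurement to the hidden target value."""
    if target <= 0:
        raise ValueError("target must be positive")
    error = (measured - target) / target  # relative error
    if abs(error) <= tolerance:
        return "match"
    return "bigger" if error < 0 else "smaller"


def getting_closer(previous: float, current: float, target: float) -> bool:
    """True if the new attempt is closer to the target than the previous one."""
    return abs(current - target) < abs(previous - target)


print(hint(1000, 4700))                  # bigger
print(hint(4600, 4700))                  # match
print(getting_closer(1000, 3300, 4700))  # True
```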

The user is free to use parts from outside the kit to achieve the goal. He might try everyday objects: a piece of copper, graphite, conductive ink, aluminum.
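For a strip of such material, the resistance follows from its geometry as R = ρ·L / (w·t). The sketch below uses textbook resistivities for copper and aluminum at 20 °C; the 16 µm foil thickness in the example is an assumed value, and for graphite or conductive ink the resistivity would have to be measured.

```python
COPPER = 1.68e-8    # resistivity in ohm·m at 20 °C
ALUMINUM = 2.65e-8  # resistivity in ohm·m at 20 °C


def strip_resistance(resistivity: float, length: float, width: float, thickness: float) -> float:
    """Resistance (ohms) of a uniform rectangular strip: R = rho * L / (w * t), SI units."""
    if min(length, width, thickness) <= 0:
        raise ValueError("dimensions must be positive")
    return resistivity * length / (width * thickness)


def strip_length_for(target_r: float, resistivity: float, width: float, thickness: float) -> float:
    """Length of strip needed to reach target_r: L = R * w * t / rho."""
    return target_r * width * thickness / resistivity


# 1 m of kitchen foil, 5 mm wide, assumed 16 µm thick: about a third of an ohm
print(strip_resistance(ALUMINUM, 1.0, 0.005, 16e-6))
```

This shows why metal offcuts only yield fractions of an ohm; graphite, whose resistivity is several orders of magnitude higher than copper's, is the more practical route to kilohms.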

Discussion

I can see a couple of interesting reasons for building the kit this way. First, the user will gain an intuitive and informal knowledge of the materials he can use. Not only can he assemble new pieces in a creative way, but there is also a new learning curve for using various parts (electronic or not) in an unconventional manner. This idea is closer to the craftsman's intimate knowledge of his material than to a cold, mathematical count of the colorful stripes on a resistor.
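For reference, the rules the user discovers by hand when combining spare resistors are the usual series and parallel formulas. This sketch tries every pair of spare parts both ways and keeps the closest result; the `best_pair` helper is hypothetical and only covers two-part combinations.

```python
from itertools import combinations


def series(*rs: float) -> float:
    """Resistors in series: values add."""
    return sum(rs)


def parallel(*rs: float) -> float:
    """Resistors in parallel: conductances (1/R) add."""
    return 1 / sum(1 / r for r in rs)


def best_pair(parts, target):
    """Closest (mode, a, b, value) reachable with two spare resistors."""
    best = None
    for a, b in combinations(parts, 2):
        for mode, value in (("series", series(a, b)), ("parallel", parallel(a, b))):
            err = abs(value - target)
            if best is None or err < best[0]:
                best = (err, mode, a, b, value)
    return best[1:]


print(best_pair([1000, 2200, 3300, 10_000], 4700))  # ('series', 1000, 3300, 4300)
```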

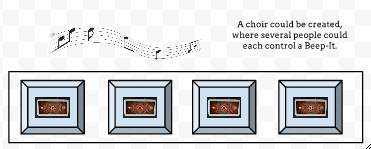

There are other learning paths for the user who doesn't want to blindly follow the almighty computer. You can either improve your knowledge of the inner workings of the kit you're building, so that you break free from the instructions altogether, or, at the opposite end of the spectrum, improve your knowledge of the inner workings of the Arduino/software tool we propose, and defeat it by building a new tool that goes through all the expected values and therefore unlocks all the instructions.
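Such a "defeat" tool could be as simple as a sweep. This sketch assumes the hidden targets are standard E12 resistor values and that the software accepts any value within 5 %; `make_oracle` is a hypothetical stand-in for the kit software's check, where a real tool would instead send each value to the software in place of the Arduino.

```python
E12 = [1.0, 1.2, 1.5, 1.8, 2.2, 2.7, 3.3, 3.9, 4.7, 5.6, 6.8, 8.2]


def e12_values(decades=range(0, 7)):
    """All E12 preferred values from 1 ohm up to 8.2 Mohm, in ascending order."""
    for d in decades:
        for m in E12:
            yield round(m * 10 ** d, 6)  # round away float noise such as 4700.000000001


def make_oracle(target: float, tolerance: float = 0.05):
    """Hypothetical stand-in for the kit software: accepts values within tolerance."""
    return lambda value: abs(value - target) <= tolerance * target


def sweep(oracle):
    """Feed every expected value to the checker until one is accepted."""
    for value in e12_values():
        if oracle(value):
            return value
    return None  # target is not a standard value


print(sweep(make_oracle(4700.0)))  # 4700.0
```

The sweep fails, returning `None`, as soon as the hidden target is not a standard value, which is one way the software could resist this kind of shortcut.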

In any case, the user has to be more creative than when following a classic instruction manual, and learns from the experience, which is the intended goal of the kit.

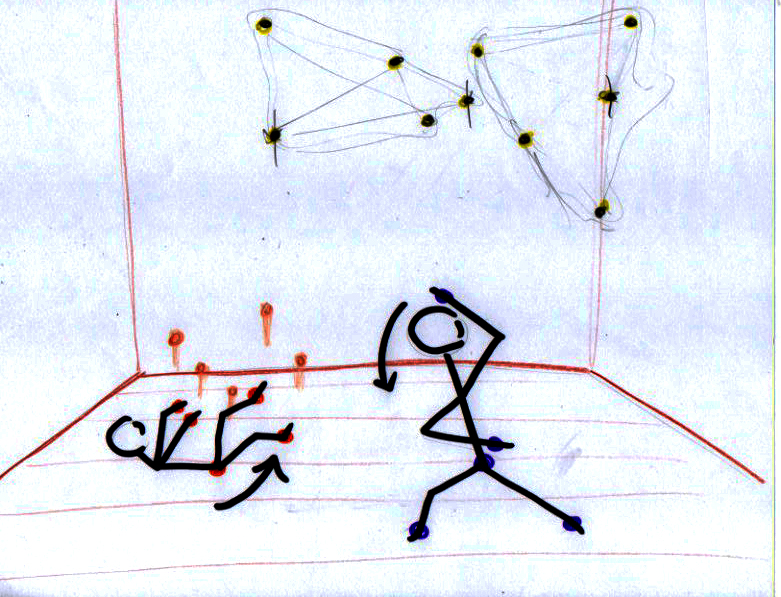

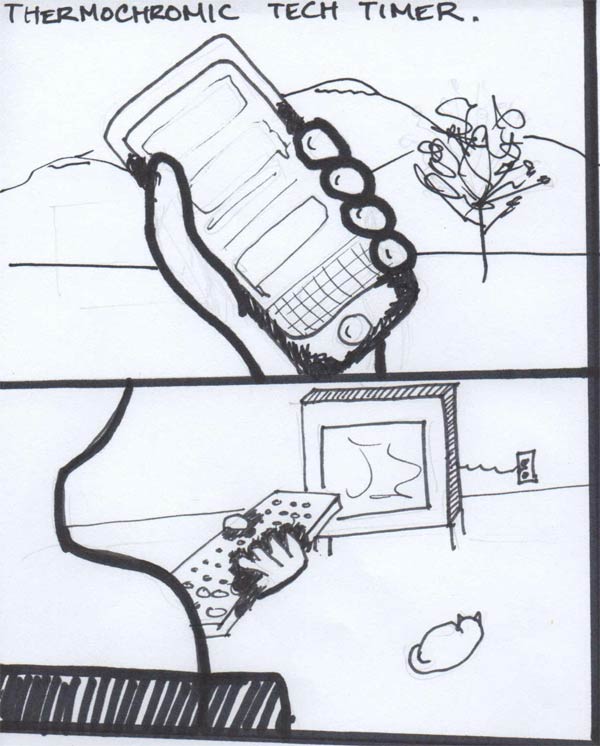

Inspiration and possible examples